A big change is coming to JFreshHockey. (Sadly, not the name.)

As of January 1st, 2021, I will be partnering with Patrick Bacon (a.k.a. Top Down Hockey) to fully integrate his models with my visualizations. This is something that’s been in the works for a while and I’m very excited about it - Patrick is a brilliant hockey mind and a professional data analyst, and the results of his model have been extremely impressive. He might be relatively new to the public-Twitter-analytics-guy thing, but his insights have been a major behind-the-scenes aspect of my work since Day 1.

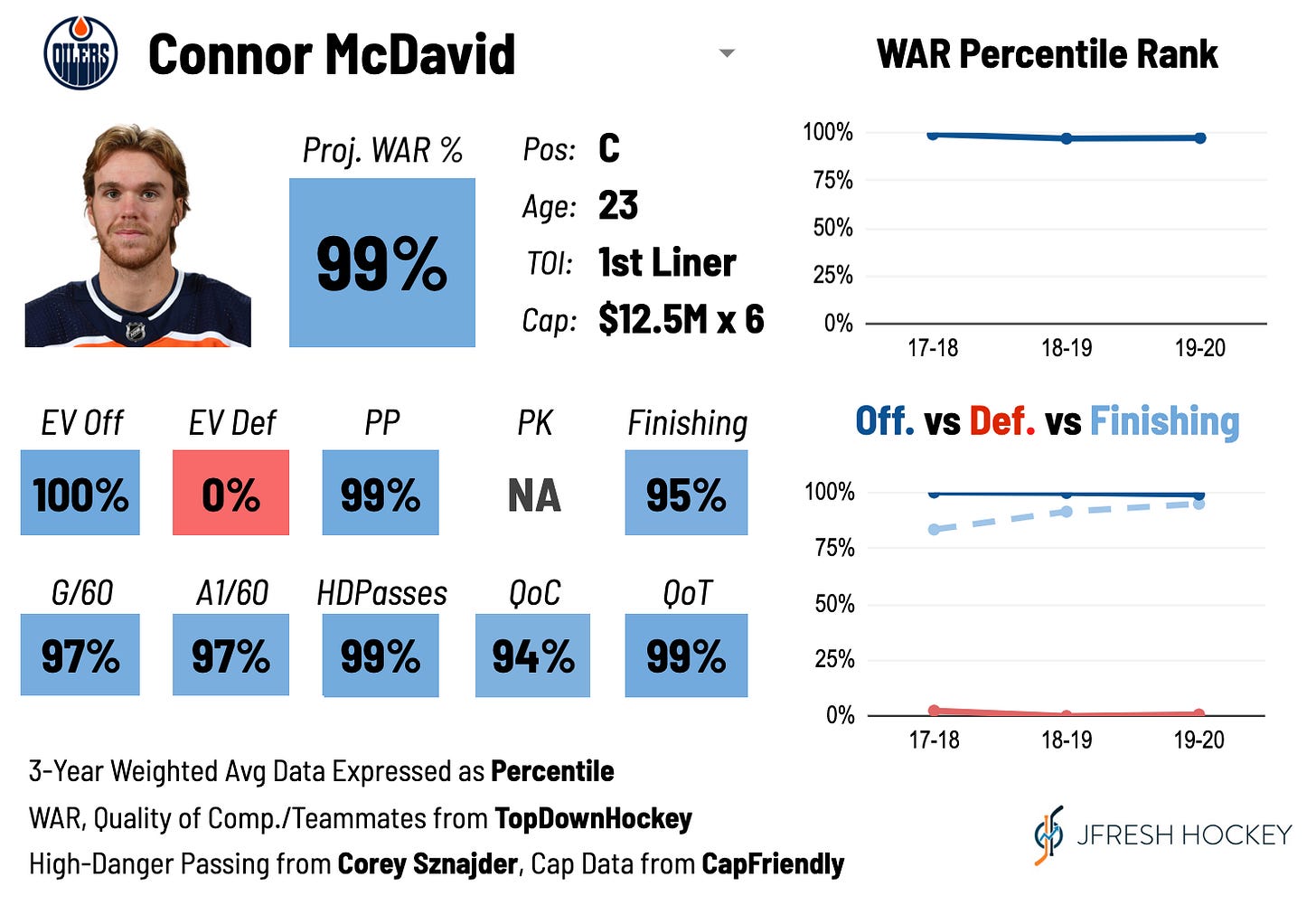

The first step of this transition is the player cards. You might have noticed some subtle differences in the ones I’ve posted lately, and that’s because they’re based on a completely different model and feature totally different stats. While they look pretty much the same, the guts are totally different. In this piece I’m going to provide a quick overview of both what the model change means in practice and how to understand and interpret what’s on the cards.

(For an explanation of the original cards to contrast, check out my original explainer from October.)

The Model Difference

There are similarities between Patrick Bacon’s WAR model and the one built by EvolvingWild, whose model was used on my previous cards and other visualizations. Both condense a player’s on-ice contributions into a single number based on their estimated on-ice impact on even strength offence and defence, special teams, and penalties. But Patrick’s model conceives of these things very differently. There are a number of changes which he outlines in his high-level (read: over my head) model explanation, but among the major ones:

It’s based on his own expected goal model, which is uniquely adjusted for arena shot-recording bias and has performed very well. As a result, values will be more accurate for teams like Minnesota and Tampa Bay, whose in-arena statkeepers tend to misrecord shot locations.

It uses priors, meaning every season everybody doesn’t start completely fresh - when Mark Stone hits the ice for the first game of the 2019-20 season, the model knows he’s Mark Stone and approaches how he impacts his teammates accordingly.

Offensive impact is not measured by impact on on-ice goals for (or relative goals for as an indicator), but by a combination of RAPM on-ice expected goals for and individual finishing ability. This dramatically limits the number of fourth liners who look like superstars because they’re getting WAR credit for on-ice luck.

Penalty impact is not limited to individual penalties drawn and taken. Instead, it is an RAPM estimate of on-ice penalties drawn and taken. So, for example, if a player’s great possession driving leads to his opponents taking penalties, he’ll be rewarded.

Many elements of this model were developed in tandem between the two of us - by which I mostly mean I would suggest something and he would patiently explain why it was a stupid idea or point out that he had already integrated it. Overall, because of the changes outlined above, I am more confident in this model’s outputs and feel that it provides assessments of players that are more consistent, less vulnerable to puck luck, and more intuitive.

What are Percentiles and How are they Weighted?

My player cards present data in percentile form. Percentiles are a way of showing how a given player ranks compared to their peers. For example, if a forward has 85th percentile goals per 60 minutes, that means that they have a higher goals per 60 than 85% of forwards in the league. So an average goal scoring forward would have “50%” under G/60.
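To make the percentile idea concrete, here is a minimal Python sketch. The function name and the sample values are made up for illustration, and it assumes the "higher than X% of peers" reading described above; how the cards handle ties is not stated, so that detail is a guess.

```python
def percentile_rank(value, peers):
    """Percentage of peers whose value is strictly below `value`."""
    if not peers:
        raise ValueError("peers must not be empty")
    below = sum(1 for p in peers if p < value)
    return 100.0 * below / len(peers)


# Hypothetical goals-per-60 values for a ten-forward league.
g60 = [0.2, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0, 1.2, 1.5]

print(percentile_rank(1.2, g60))  # 80.0
```

In this toy league, a forward at 1.2 goals per 60 outscores eight of the ten forwards, so he would show "80%" under G/60.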

To be included in this dataset, a player needs to have played 200 minutes in a given season. This ensures a minimum sample size so that, for example, a guy who played three really good games doesn’t totally ruin the standard. With the exception of quality of competition, every stat on this card is based on a three-season weighted average, meaning that the 2019-20 season is weighted more heavily than the 2018-19 season, which is weighted more heavily than the 2017-18 season. That means that the results are not solely based on this past season, but the past season is the most important. When there is a large enough sample for me to build cards for the 2020-21 season, the same will be true but shifted forward by a year.
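A rough sketch of how a three-season weighted average with a minutes cutoff could work is below. The 200-minute threshold comes from the text, but the weights (3, 2, 1) are purely hypothetical placeholders since the real ones aren't given here, and dropping a sub-threshold season and renormalizing the remaining weights is my own assumption.

```python
MIN_MINUTES = 200  # inclusion threshold stated in the text

# Hypothetical weights (NOT the real ones): recent seasons count more.
WEIGHTS = {"2019-20": 3.0, "2018-19": 2.0, "2017-18": 1.0}


def weighted_average(seasons):
    """seasons maps a season label to (minutes played, stat value)."""
    total = 0.0
    weight_sum = 0.0
    for label, (minutes, value) in seasons.items():
        if minutes < MIN_MINUTES:
            continue  # assumption: seasons under the threshold are dropped
        weight = WEIGHTS[label]
        total += weight * value
        weight_sum += weight
    # Renormalize by the weights actually used; None if nothing qualified.
    return total / weight_sum if weight_sum else None


player = {
    "2019-20": (1100, 1.2),
    "2018-19": (950, 0.9),
    "2017-18": (150, 2.5),  # under 200 minutes, so ignored
}

print(weighted_average(player))  # about 1.08
```

The hot 150-minute season never touches the result, which is the point of the cutoff.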

Forward Card

Context: Included to provide context are the player’s name, team, position, 2020-21 season age, and contract.

Projected Wins Above Replacement: A big difference with these cards is that the projected WAR value is now presented as a percentile as well. That’s in large part because we’re about to enter a shortened season, which means that a 4 WAR player will actually only provide about 2.7 this year. It also allows me to use more accurate projections.
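The shortened-season arithmetic can be sketched as a simple proration. The 56-game schedule below is an assumption on my part; it is the length that lines up with the "about 2.7" figure against a normal 82-game season.

```python
FULL_SEASON_GAMES = 82
SHORT_SEASON_GAMES = 56  # assumption: consistent with the 2.7 figure in the text


def prorate_war(full_season_war, games=SHORT_SEASON_GAMES):
    """Scale a full-season WAR value down to a shorter schedule."""
    return full_season_war * games / FULL_SEASON_GAMES


print(round(prorate_war(4.0), 1))  # 2.7
```

Because every player gets scaled by the same factor, the percentile ranking is unaffected by the schedule length, which is part of why a percentile is a convenient way to present it.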

Wins Above Replacement Components: The top row shows the five most important components of Patrick’s WAR model:

EV Off: Even strength offence. This is an estimate of how a player impacts his team’s even strength scoring chance generation (or expected goals for).

EV Def: Even strength defence. This is an estimate of how a player impacts his team’s prevention of even strength scoring chances against (or expected goals against).

PP: Powerplay. Estimate of how a player impacts his team’s powerplay scoring chances. Player must play at least 1 minute per game on the PP to qualify.

PK: Penalty kill. Estimate of how a player impacts his team’s penalty kill scoring chance suppression. Player must play at least 1 minute of PK per game to qualify.

Finishing: An estimate of how a player contributes to his team through his ability to score on the shots he takes above what is expected based on the expected goal model.

Primary Points:

G/60: Even strength goals per 60 minutes.

A1/60: Primary assists per 60 minutes.
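Both of these are per-60 rate stats, which are just counts scaled by ice time. A minimal sketch, with a made-up function name and made-up numbers:

```python
def per_60(count, minutes):
    """Convert a raw count into a rate per 60 minutes of ice time."""
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    return 60.0 * count / minutes


# Hypothetical player: 20 goals in 1000 minutes.
print(per_60(20, 1000))  # 1.2
```

Rates like this let you compare a second-liner with a first-liner without the difference in ice time drowning everything out.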

High-Danger Passing

HDPasses: This is a new addition. The new WAR model does not take into account teammate finishing at all. In most cases, this makes the results a lot stronger and cuts out a lot of random luck and noise. But there is evidence that some players do create better chances than expected goals can track through their passing ability. To compensate for this I’ve included three-season weighted high-danger passes, as manually tracked by Corey Sznajder.

Quality of Competition/Teammates

QoC: A measure of a player’s quality of competition based on the average time on ice of the players they play against. The variation of this stat is not particularly pronounced because, contrary to popular belief, matchups are very fluid. WAR accounts for competition, and this should strictly be considered extra contextual information about how a player has been deployed by their coach.

QoT: This stat applies the same calculation to a player’s linemates. There is more variation here because it’s easier for a coach to control who a guy plays with than who a guy plays against. Once again, this is adjusted for in the metrics and should be looked at as context.
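As a rough illustration of a TOI-based quality measure, the sketch below averages opponents' time on ice per game, weighted by how long each opponent shared the ice with the player. Weighting by shared minutes is my assumption, and the function name and numbers are made up; the same calculation applied to linemates would give QoT.

```python
def quality_of_competition(matchups):
    """matchups: list of (minutes shared on ice, opponent's TOI per game)."""
    total_shared = sum(shared for shared, _ in matchups)
    if total_shared == 0:
        raise ValueError("no shared ice time")
    # Average opponent TOI, weighted by shared ice time (an assumption).
    return sum(shared * toi for shared, toi in matchups) / total_shared


# Hypothetical: 30 minutes against a 20-minute-a-night star,
# 10 minutes against a 12-minute depth player.
matchups = [(30.0, 20.0), (10.0, 12.0)]

print(quality_of_competition(matchups))  # 18.0
```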

The Graphs: The graphs are based on those same WAR/EV Off/EV Def/Finishing numbers, but instead of showing a three-year sample they split them up by season. This lets you see how a player’s impact has changed over time - did they improve or decline, did they become better defensively? The bottom graph shows three WAR components: impact on expected goals for (solid blue), against (solid red), and individual finishing (dashed light blue). The top graph shows a simple timeline of overall WAR percentile rank.

So let’s apply this to that McDavid chart. Here’s how you could interpret it.

Connor McDavid is one of the best forwards in the NHL, if not the best. He’s an elite offensive player at even strength and the penalty kill, super productive, has fantastic shooting and passing abilities, and is trusted in big minutes. He is also possibly the worst forward in the league when it comes to defensive play - his numbers there have been consistently awful.

Defenceman Card

Most of the stuff here is the same, with two small exceptions.

While I do show finishing WAR on the card, and I am more confident in it in Patrick’s model, I have chosen to still remove it from the overall WAR projection for defencemen. It’s simply too random and would weigh too heavily for me to consider it a valuable addition. It also doesn’t appear on the graphs for this reason.

Instead of showing High-Danger Passes, which are not super relevant for defencemen, I show their WAR Penalty impact - their impact on on-ice penalties for and against.

So let’s interpret the Hedman card pictured above.

Victor Hedman is a big-minute #1 defenceman with elite results. He’s especially a force offensively, driving scoring chances and producing very well. His defensive play is solid too, especially on the penalty kill. Interestingly, Hedman’s huge minute load deflates his overall quality of competition, and the Bolts’ fascination with pairing him with whatever waiver-wire RD they can scrounge up really lowers his quality of teammates.

Goalie Cards

For the most part, goalie cards are the same. Because of the scorekeeper adjustment, the values are more accurate than before. Some stats do come from MoneyPuck for the time being, but I will note that before these cards become available through Patreon they will be re-calculated using Patrick’s model.

Context: Name, team, age, percentage of his 2019-20 team’s games that he played in, his role based on that percentage (starter, 1A, 1B, or backup), and his contract information.

Projected WAR %: The player’s projected 2020-21 WAR ranking, based on a weighted average of his past three seasons of work.

EV: The player’s Goals Saved Above Expected per 60 minutes at even strength, weighted for the past three seasons.

PK: The player’s Goals Saved Above Expected per 60 minutes on the penalty kill, weighted for the past three seasons.

High, Medium, and Low Danger: The player’s GSAx against high, medium, and low danger shots, weighted over the past three seasons. Some consider the high danger chances to be what matters most, but this also provides some insights into the kinds of goal a goalie allows.
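For anyone unfamiliar with the stat, Goals Saved Above Expected is the gap between the goals an expected goal model says a goalie should have allowed and the goals he actually allowed. A minimal sketch of the per-60 version, with a made-up function name and made-up numbers:

```python
def gsax_per_60(expected_goals_against, goals_against, minutes):
    """Goals Saved Above Expected, scaled to a per-60-minute rate."""
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    gsax = expected_goals_against - goals_against  # positive is good
    return 60.0 * gsax / minutes


# Hypothetical goalie: 110 expected goals against, 100 allowed, 3000 minutes.
print(gsax_per_60(110.0, 100, 3000))  # 0.2
```

The same calculation can be restricted to even strength, the penalty kill, or a single danger level just by filtering which shots feed the two totals.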

Start Performances: These are stats that analyze how a player performed in games they started, adapted from Rob Vollman’s “Quality Start %,” a stat which tracks whether a goalie’s save percentage in a given game was above league average. These measures take that stat and apply “above expected” principles to it.

QS%: Quality Start %. This is the percentage of a goalie’s starts where they posted a positive goals saved above expected. Remember that on this card it is expressed as a percentile ranking - a goalie with “100%” didn’t have a perfect QS% but simply had the highest in the league.

RGS%: Really Good Start %. This is the percentage of a goalie’s starts where they saved over two goals above expected - basically where they played lights out.

RBS%: Really Bad Start %. This is the percentage of a goalie’s starts where they allowed over two goals above expected - they were a sieve.
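The three start stats above can be sketched from a list of per-game goals saved above expected. The function name and the sample games are hypothetical; the cutoffs (above zero, and more than two goals in either direction) follow the definitions given here.

```python
def start_rates(game_gsax, threshold=2.0):
    """Percentage of starts that were quality, really good, or really bad."""
    n = len(game_gsax)
    if n == 0:
        raise ValueError("no starts")
    return {
        # Positive goals saved above expected.
        "QS%": 100.0 * sum(g > 0 for g in game_gsax) / n,
        # Saved more than `threshold` goals above expected.
        "RGS%": 100.0 * sum(g > threshold for g in game_gsax) / n,
        # Allowed more than `threshold` goals above expected.
        "RBS%": 100.0 * sum(g < -threshold for g in game_gsax) / n,
    }


# Hypothetical goals saved above expected in eight starts.
games = [2.5, 0.4, -0.3, -2.2, 1.1, 0.0, -0.8, 3.0]

print(start_rates(games))  # {'QS%': 50.0, 'RGS%': 25.0, 'RBS%': 12.5}
```

These raw percentages would then be turned into percentile rankings against other goalies before going on the card.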

Rebounds: This shows a goalie’s rebound control as measured by MoneyPuck’s rebounds allowed above expected.

Consistency: This shows a goalie’s year over year reliability as measured by variance.

So once more, let’s take a look at Matt Murray using this card.

Matt Murray has had a difficult time at even strength in two of the past three years. He’s quite inconsistent year-to-year and night-to-night, and rarely steals games for his team. His rebound control is solid, as is his performance against high-danger shots, but his consistently poor results against less dangerous chances are a red flag. He and the Senators will be hoping for results more in line with his 2018-19 campaign than the two surrounding it.

Conclusion

And that’s all! Like I said before, I am very excited about what this partnership means moving forward - it means I can make a lot more stuff available to you guys through Patreon and explore a lot of things that I otherwise couldn’t. The transition over to Patrick’s model will be fully complete by 2021 and there’s a lot to look forward to. Give him a follow at @TopDownHockey and I hope you enjoy this next step!

Is there a way to see the player cards for all players? Or even some of these player card stat lines in an excel or searchable table?

Great explanations, this is really helping me contextualize hockey analytics! Thank you 😃

"It uses priors, meaning every season everybody doesn’t start completely fresh" - so is it 15% 3 years ago, 35% 2 years ago, and 50% from last year?